An Introduction to Kennel Synchronization

In a shared memory application, developers must ensure that shared resources are protected from concurrent access. The kernel is no exception. Shared resources require protection from concurrent access because if multiple threads of execution access and manipulate the data at the same time, the threads may overwrite each other's changes or access data while it is in an inconsistent state. Concurrent access of shared data is a recipe for instability that often proves hard to track down and debug; getting it right at start is important.

- SMP

- Symmetrical MultiProcessing

- Shared Memory Multiprocessor

- muliprocessors, one shared main memory

Critical Regions and Race Conditions

- Critical Region(Critical Section)

- It cannot be executed by more than one thread

- Atomic

- Operations complete without interruption as if the entire critical region were one individual instruction.

- Race Condition

- It is possible for two threads to be simultaneously executing within the same critical region.

- Race condition do not occur is called synchronization.

Why Do We Need Protection?

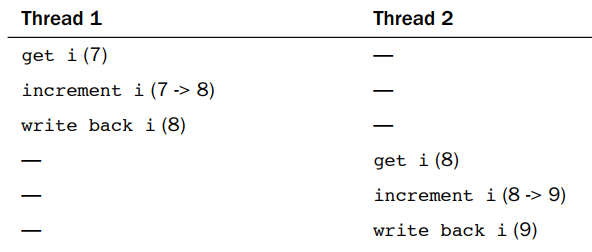

Assume that there are two threads of execution, both enter this critical region, and the initial value of i is 7. The desired outcome is then similar to the following.

As expected, 7 incremented twice is 9. A possible outcome, however, is the following.

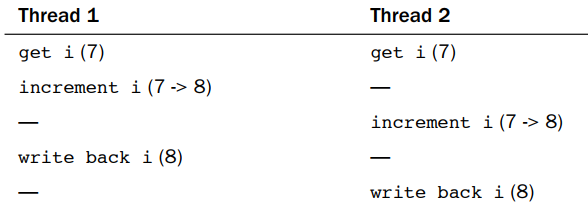

As expected, 7 incremented twice is 9. A possible outcome, however, is the following.

The variable i contains the value 8 when, in fact, it should now contain 9.

The variable i contains the value 8 when, in fact, it should now contain 9.

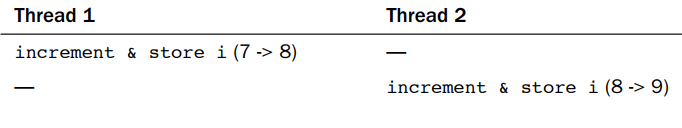

Most processors provide an instruction to atomically read, increment, and write back a single variable. Using this atomic instruction, the only possible outcome is

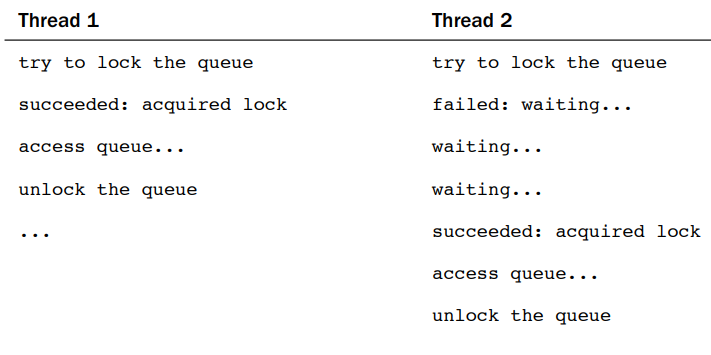

Locking

- It is impossible for architectures to provide atomic instructions to support the indefinitely sized critical regions.

- Lock works much like a lock on a door.

- Locks are advisory and voluntary. Locks are entirely a programming construct that the programmer must take advantage of. Nothing prevents you from writing code that manipulates the fictional without the appropriate lock.

Cause of Concurrency

- Interrupts

- An interrupt can occur asynchronously at almost any time, interrupting the currently executing code.

- Softirqs and tasklets

- The kernel con raise or schedule a softirq or tasklet at almost any time, interrupting the currently executing code.

- Kernel preemption

- Because the kernel is preemptive, one task in the kernel can preempt another.

- Sleeping and synchronization with user-space

- A task in the kernel can sleep and thus invoke the scheduler, resulting in the running of a new process.

- Symmetrical multiprocessing

- Two or more processors can execute kernel code at exactly the same time.

Knowing What to Protect

- Most global kernel data structures need locking.

- lock data, not code

Deadlocks

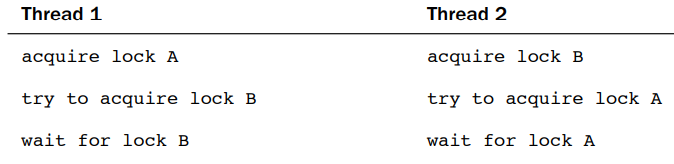

- The threads all wait for each other, but they never make any progress toward releasing the resource that they already held.

- deadly embrace (ABBA deadlock)

- Deadlock-free code

- Implement lock ordering. Nested locks must always be obtained in the same order. This prevents the deadly embrace deadlock. Document the lock ordering so others will follow it.

- Prevent starvation. Ask your self, does this code always finish? If foo does not occur, will bar wait forever?

- Do not double acquire the same lock.

- Design for simplicity. Complexity in your locking scheme invites deadlocks.

Contention and Scalability

- Contention means a lock currently in use but that another thread is trying to acquire.

- Scalability means a expanded system with a large number of processes, processors, or large amount of memory.

- Coarse-grained Lock

- A very coarse lock protects a large amount of data.

- Coarse locking results in poor scalability if there is high lock contention.

- Fine-grained Lock

- A very fine lock protects a small amount of data.

- Find locking results in wasteful overhead if there is little lock contention.